Keynote

We are honored to introduce the Discovery Science 2024 keynote speakers, each a leading figure in their field. These scientists have made groundbreaking contributions, and their work continues to inspire innovation and discovery. Below, you will find a brief introduction to their careers and an overview of the presentations they will share with us at this year’s conference.

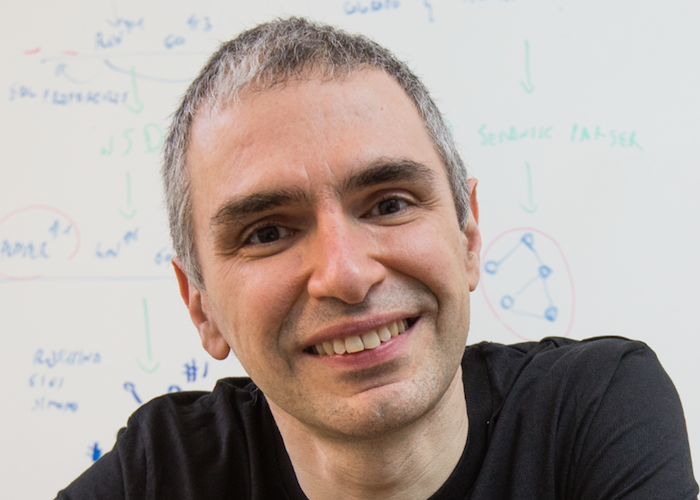

Roberto Navigli

Sapienza University of Rome & Babelscape

Roberto Navigli is Professor of Computer Science at the Sapienza University of Rome, where he leads the Sapienza NLP Group. He has received two prestigious ERC grants in AI, on multilingual word sense disambiguation (2011-2016) and on multilingual language- and syntax-independent open-text unified representations (2017-2022); these were highlighted among the 15 projects through which the ERC transformed science. In 2015 he received the META prize for groundbreaking work in overcoming language barriers with BabelNet, a project also highlighted in The Guardian and Time magazine, and winner of the Artificial Intelligence Journal prominent paper award 2017 (followed by a second AIJ prominent paper award in 2023 for the NASARI sense embeddings). He is the co-founder of Babelscape, a successful company that enables Natural Language Understanding in dozens of languages. He served as Associate Editor of the Artificial Intelligence Journal (2013-2020) and Program Chair of ACL-IJCNLP 2021. He will serve as General Chair of ACL 2025.

Keynote Day 2 - 15 October 2024 h12:00

Human Factors and Algorithmic Fairness

In this talk, we present ongoing research on human factors of decision support systems that has consequences from the perspective of algorithmic fairness. We study two different settings: a game and a high-stakes scenario. The game is an exploratory "oil drilling" game, while the high-stakes scenario is the prediction of criminal recidivism. In both cases, a decision support system helps a human make a decision. We observe that, in general, users of such systems must tread a fine line between algorithmic aversion (completely disregarding the algorithmic support) and automation bias (completely disregarding their own judgment). The talk presents joint work led by David Solans and Manuel Portela.

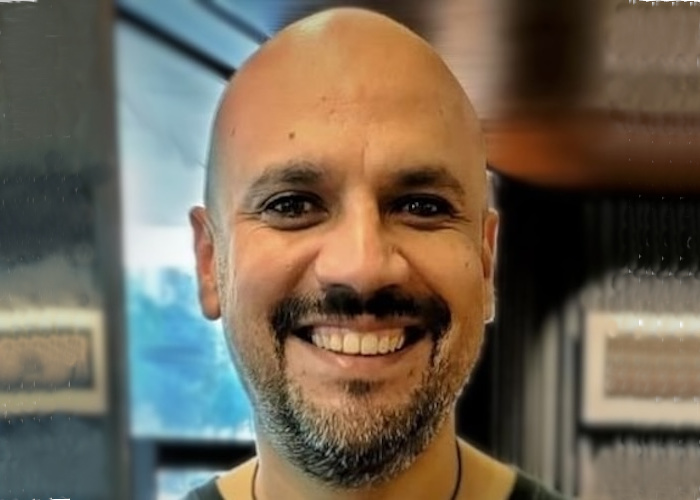

Carlos Castillo

ICREA and Universitat Pompeu Fabra

Carlos Castillo (they/them) is an ICREA Research Professor at Universitat Pompeu Fabra in Barcelona, where they lead the Web Science and Social Computing research group. They are a web miner with a background in information retrieval and have been influential in the areas of crisis informatics, web content quality and credibility, and adversarial web search. They are a prolific, highly cited researcher who has co-authored over 110 publications in top-tier international conferences and journals, receiving two test-of-time awards, five best paper awards, and two best student paper awards. Their works include a book on Big Crisis Data, as well as monographs on Information and Influence Propagation, and Adversarial Web Search.

Keynote Day 3 - 16 October 2024 h12:00

Bridging Explainable AI and Contestability

AI has become pervasive in recent years, and the need for explainability is widely agreed to be crucial for the safe and trustworthy deployment of AI systems. However, state-of-the-art AI and eXplainable AI (XAI) approaches mostly neglect the need for AI systems to be contestable, even though contestability is advocated by AI guidelines (e.g. by the OECD) and by regulation of automated decision-making (e.g. GDPR in the EU and UK). In this talk I will advocate forms of contestable AI that can (1) interact to progressively explain outputs and/or reasoning, (2) assess grounds for contestation provided by humans and/or other machines, and (3) revise decision-making processes to redress any issues successfully raised during contestation. I will then explore how contestability can be achieved computationally, starting from various approaches to explainability, including some drawn from the field of computational argumentation. Specifically, I will give an overview of a number of approaches to (argumentation-based) XAI for neural models and for causal discovery, and of their uses to achieve contestability.

Francesca Toni

Imperial College London

Francesca Toni is Professor in Computational Logic and Royal Academy of Engineering/JP Morgan Research Chair on Argumentation-based Interactive Explainable AI (XAI) at the Department of Computing, Imperial College London, UK, as well as the founder and leader of the CLArg (Computational Logic and Argumentation) research group and of the Faculty of Engineering XAI Research Centre. She holds an ERC Advanced grant on Argumentation-based Deep Interactive eXplanations (ADIX). Her research interests lie within the broad area of Explainable AI, at the intersection of Knowledge Representation and Reasoning, Machine Learning, Computational Argumentation, Argument Mining, and Multi-Agent Systems. She is a EurAI Fellow, an IJCAI Trustee, a member of the Board of Directors of KR Inc., a member of the editorial board of the Argument and Computation journal, Editorial Advisor for Theory and Practice of Logic Programming, associate editor for the AI journal, and general chair of IJCAI 2026.